This article analyzes how industry AI frameworks approach the measurement of AI maturity.

Many organizations are rapidly expanding their use of AI while regulatory expectations continue to intensify. Parts 1–3 of this series examined how regulators define responsible and trustworthy AI and how these definitions translate into legal and supervisory obligations. From Part 4 onward, the focus has shifted to industry AI frameworks, exploring how organizations can translate regulatory expectations into practical governance, strategy, and implementation approaches.

Part 4 analyzed differences in framework coverage and scope, highlighting contrasting assumptions about where AI governance starts and how AI strategy is shaped.

Part 5 builds on this foundation by focusing on AI maturity measurement, examining how industry frameworks define, structure, and assess organizational AI maturity.

The goals of this article are to:

- Analyze how industry frameworks conceptualize AI maturity measurement

- Compare maturity dimensions and maturity level definitions

- Clarify what these approaches mean for governance, prioritization, and regulatory alignment

AI Maturity Models and Measurement Dimensions

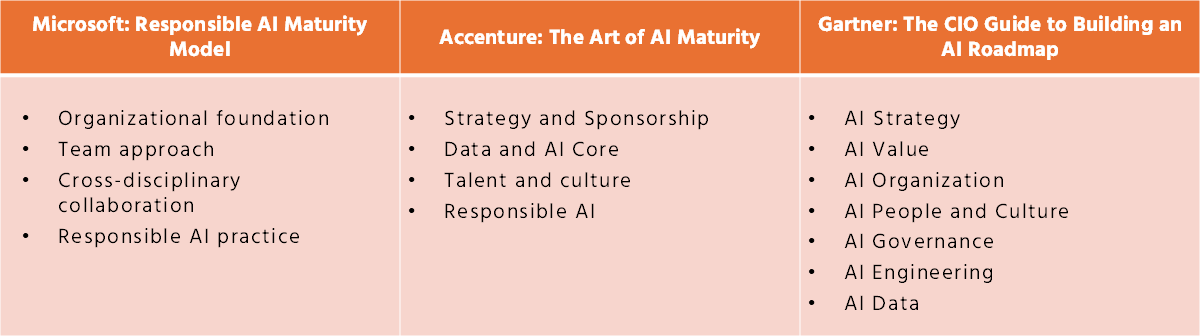

Table 1 provides a comparative overview of the high-level AI maturity dimensions disclosed by three industry authorities:

- Microsoft – Responsible AI Maturity Model

- Accenture – The Art of AI Maturity

- Gartner – The CIO Guide to Building an AI Roadmap

Table 1: The comparison of AI maturity measurement dimensions.

Although the terminology used by these frameworks differs, the maturity dimensions can be aligned based on their underlying focus.

Three common categories emerge across these maturity dimensions.

AI organization and governance foundations

One recurring category relates to AI organization and governance foundations. Microsoft refers to this area as Organizational Foundation, Accenture emphasizes Strategy and Sponsorship, and Gartner distributes these elements across AI Strategy, AI Values, AI Organization, and AI Governance. Across all three approaches, this category addresses leadership commitment, ethical positioning, governance structures, and decision-making mechanisms required for AI adoption at scale.

AI teams, skills, and culture

A second category concerns AI teams, skills, and culture. Microsoft highlights team approaches and cross-disciplinary collaboration, Accenture focuses on talent strategy and cultural readiness, and Gartner refers broadly to People and Culture. These dimensions collectively address the organizational conditions needed to develop, deploy, and sustain AI responsibly.

AI practices and core value-delivering capabilities

The third category covers AI practices and core value-delivering capabilities. Microsoft emphasizes responsible AI practices and governance principles such as accountability, transparency, and risk management. Accenture adopts a broader operational perspective, including data and AI platforms, build-versus-buy decisions, and data governance. Gartner groups these elements under AI Engineering and AI Data, presenting a more technical but less detailed operational view.

Despite structural differences, Table 1 reveals a consistent underlying maturity logic centered on governance foundations, organizational readiness, and operational AI practices.

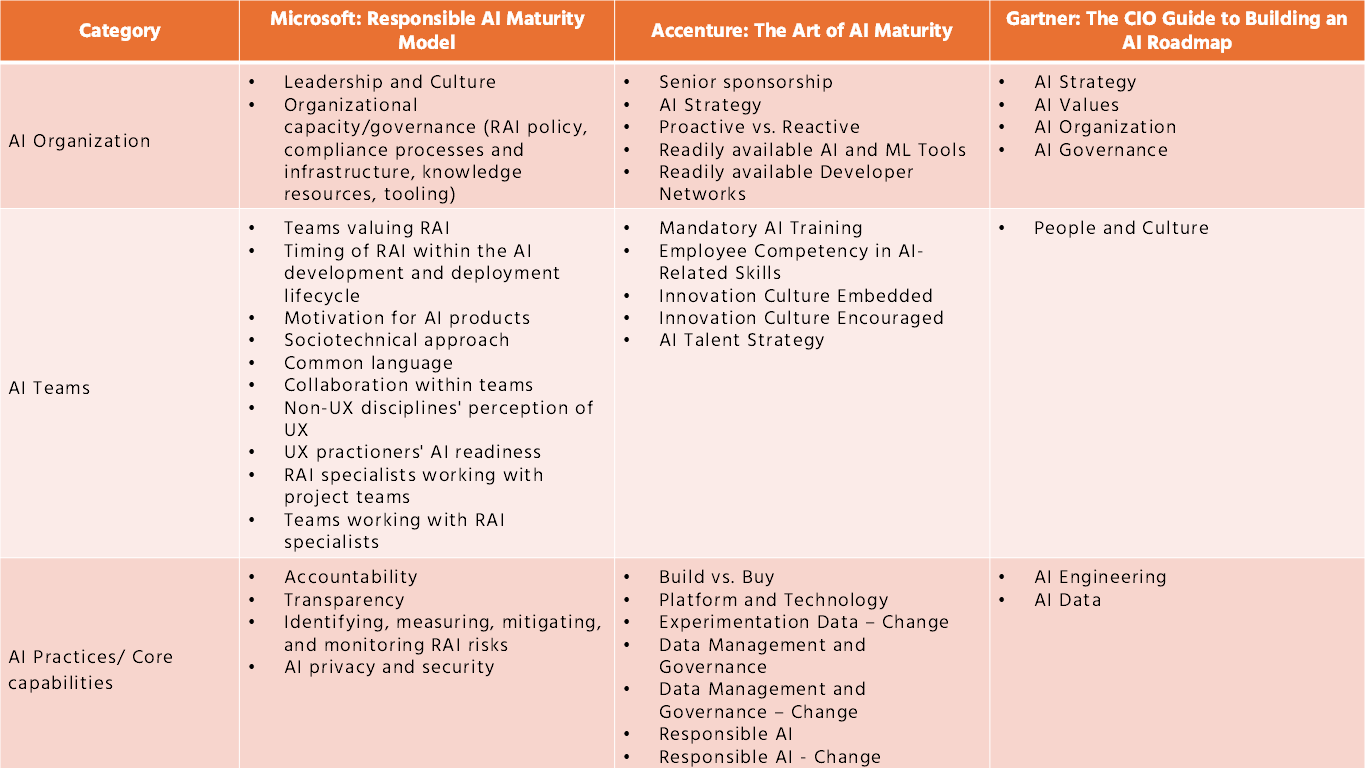

Lower-Level Maturity Capabilities

Table 2 expands the comparison by examining lower-level capabilities within each maturity dimension. It should be noted that Gartner does not disclose detailed lower-level capabilities, which limits direct comparison for that framework.

Table 2: The detailed comparison of AI maturity measurement dimensions.

The comparison leads to several observations.

For AI organization and governance, all frameworks emphasize leadership commitment and formal governance arrangements. Microsoft and Accenture place stronger emphasis on operational readiness through tools, processes, and embedded practices, while Gartner places relatively more weight on ethical principles and value alignment alongside organizational structures.

For AI teams, all frameworks recognize the importance of skills, collaboration, and cultural readiness. Microsoft provides the most granular view, explicitly linking responsible AI practices to development workflows and cross-disciplinary collaboration. Accenture focuses more strongly on formal training, talent strategies, and fostering an innovation-oriented culture. Gartner addresses people and culture at a higher level without detailing specific competency-building mechanisms.

For AI practices and core capabilities, all frameworks emphasize the importance of robust operational practices. Microsoft focuses heavily on responsible AI governance and risk management. Accenture covers a broader operational scope, including technology platforms and data governance. Gartner groups these capabilities into engineering- and data-focused categories, offering a technically oriented but less detailed perspective.

Overall, Table 2 reinforces that industry frameworks converge on similar capability areas, even when their level of detail and emphasis differ.

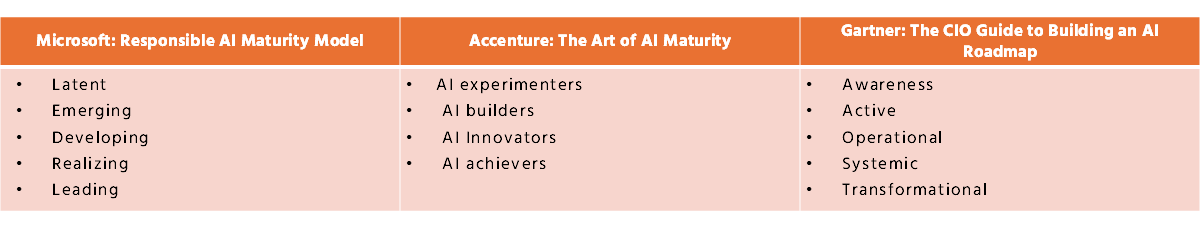

AI Maturity Levels

In addition to maturity dimensions, frameworks differ in how they define maturity levels. Table 3 compares the maturity levels proposed by Microsoft, Accenture, and Gartner.

Table 3: The comparison of AI maturity levels.

Microsoft and Gartner both define five maturity levels that reflect a staged progression from fragmented or ad hoc practices toward formalized, governed, and scalable AI capabilities. Although the naming and descriptions differ, both models assume incremental advancement and support roadmap-based planning.

Accenture’s approach differs in structure. Its maturity levels resemble a classification of organizational states rather than a strict progression model. While this approach still enables benchmarking, it places less emphasis on step-by-step capability evolution and more on positioning organizations relative to one another.

Despite these differences, all three maturity models ultimately assess the same underlying question: the extent to which AI-related capabilities are defined, embedded, governed, and consistently applied across the organization.
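The practical difference between a staged progression and a classification of states can be illustrated with a small sketch. The Python example below assumes a hypothetical five-stage ordered scale (the level names are placeholders, not the labels used by Microsoft or Gartner) and uses the three common categories identified earlier as dimensions. Because staged levels are ordered, the distance between a current and a target level can be computed per dimension and sorted into a rough improvement sequence; an unordered classification of organizational states does not support this kind of arithmetic.

```python
from enum import IntEnum


class Level(IntEnum):
    """Hypothetical five-stage maturity scale; names are placeholders."""
    INITIAL = 1
    EMERGING = 2
    DEFINED = 3
    MANAGED = 4
    OPTIMIZED = 5


def gaps_to_target(current: dict[str, Level],
                   target: dict[str, Level]) -> list[tuple[str, int]]:
    """Return (dimension, levels_missing) pairs for dimensions below target.

    Subtracting levels is only meaningful because the scale is ordered,
    which is exactly what a staged progression model provides.
    """
    gaps = []
    for dim, goal in target.items():
        # A dimension not yet assessed is treated as being at the lowest stage.
        now = current.get(dim, Level.INITIAL)
        if goal > now:
            gaps.append((dim, goal - now))
    # Largest gap first; ties broken alphabetically for a stable ordering.
    return sorted(gaps, key=lambda g: (-g[1], g[0]))


# Hypothetical scores for the three common categories discussed above.
current = {
    "Organization and governance": Level.DEFINED,
    "Teams, skills, and culture": Level.EMERGING,
    "AI practices": Level.EMERGING,
}
target = {
    "Organization and governance": Level.MANAGED,
    "Teams, skills, and culture": Level.DEFINED,
    "AI practices": Level.MANAGED,
}
print(gaps_to_target(current, target))
# [('AI practices', 2), ('Organization and governance', 1), ('Teams, skills, and culture', 1)]
```

The scores are invented for illustration. In practice, the order of improvement actions would also depend on the organization's own priorities and dependencies, not on gap size alone.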

Summary

This analysis leads to the following conclusions:

- AI maturity measurement across industry frameworks is built on broadly comparable capability areas, even though frameworks differ in terminology and structure.

- Organizational and governance foundations consistently form the backbone of maturity assessment, complemented by dimensions related to people, culture, and operational AI practices.

- Industry frameworks diverge most clearly in their emphasis and level of detail, particularly in how explicitly they address responsible AI practices, operational readiness, and technical execution.

- Maturity levels are not defined consistently across frameworks; some models describe maturity as a staged progression of capabilities, while others use maturity levels primarily to classify organizational states.

The current state of industry frameworks has several implications for organizations:

- These differences in maturity models and level definitions create practical challenges for organizations attempting to assess and communicate their AI maturity. When maturity dimensions are framed differently, assessment results may not be directly comparable across frameworks, making benchmarking and external communication more complex.

- Inconsistent interpretations of maturity levels can also affect internal decision-making. Organizations may struggle to translate maturity scores into concrete improvement actions, particularly when maturity models emphasize classification rather than progression. This can limit the usefulness of maturity assessments for roadmap development and investment prioritization.

- From a governance perspective, divergent maturity approaches increase the risk that maturity assessments become symbolic exercises rather than tools for managing AI risks and capabilities. Without a clear link between maturity results, governance ownership, and planning processes, organizations may find it difficult to demonstrate credible progress to regulators, auditors, or internal oversight bodies.

- These implications underline the need for a structured and intentional approach to organizing AI maturity measurement—one that connects industry models to internal governance, observable practices, and continuous improvement mechanisms.

Concluding perspective: organizing AI maturity measurement in practice

Organizations can follow a set of recommendations to guide how AI maturity is measured and applied in practice.

- The process starts with clarifying why AI maturity is being assessed

A clear purpose anchors maturity measurement. Organizations may aim to support regulatory dialogue, guide governance design, prioritize investments, or track progress over time. Clarifying this intent helps determine which maturity models are most relevant and how detailed the assessment needs to be.

- A natural next step involves defining the scope of AI maturity being measured

AI maturity can span strategy, governance, data, technology, people, and operational practices. Establishing scope early helps avoid fragmented assessments and ensures maturity measurement reflects the organization’s actual AI footprint.

- A thoughtful review of industry maturity models helps reveal suitable reference structures

Comparing maturity dimensions and progression logic across frameworks allows organizations to identify which models align with their governance structure, regulatory exposure, and operating context. This review also clarifies where adaptation is required.

- The next step involves translating maturity dimensions into internal capability views

High-level maturity dimensions become actionable when mapped to internal capabilities, ownership, and practices. This translation connects abstract maturity concepts to concrete organizational responsibilities.

- Organizations benefit from selecting or adapting maturity levels that reflect real behavior

Maturity levels are most effective when they describe observable practices rather than aspirational states. This supports credible self-assessment and avoids maturity scores becoming symbolic.

- A further step involves linking maturity outcomes to governance and planning processes

Maturity results deliver value when they inform prioritization, roadmap development, and investment decisions. Explicit links to governance forums and planning cycles turn maturity measurement into a decision-support mechanism.

- The approach naturally benefits from consistency and repeatability

Using a stable assessment structure over time allows organizations to track progress, identify trends, and demonstrate improvement. Consistency also supports internal and external communication.

- Periodic reassessment ensures AI maturity measurement remains relevant

As AI use cases, risks, and regulatory expectations evolve, maturity models and criteria may need adjustment. Regular reassessment helps maintain alignment between ambition, capability, and control.
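Several of these recommendations (internal capability views, clear ownership, levels grounded in observable practice, and repeatable reassessment) can be sketched as a simple record structure. The Python example below is a minimal, hypothetical illustration: the field names, the capabilities and owners in the usage data, the 1 to 5 scale, and the rule that a claimed level counts only when supporting evidence is recorded are all assumptions made for illustration, not features of any of the frameworks discussed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CapabilityAssessment:
    """One internal capability, mapped to a maturity dimension and an accountable owner."""
    dimension: str
    capability: str
    owner: str
    claimed_level: int              # level on a hypothetical 1-5 staged scale
    evidence: tuple[str, ...] = ()  # observable artifacts supporting the claim

    @property
    def credited_level(self) -> int:
        # Assumed rule: a claim without recorded evidence is credited only at level 1,
        # so scores reflect observable practice rather than aspiration.
        return self.claimed_level if self.evidence else 1


def compare_cycles(previous: list[CapabilityAssessment],
                   current: list[CapabilityAssessment]) -> dict[str, int]:
    """Change in credited level per capability between two assessment cycles.

    Only capabilities assessed in both cycles are compared; keeping the same
    capability structure over time is what makes the results comparable.
    """
    before = {a.capability: a.credited_level for a in previous}
    return {
        a.capability: a.credited_level - before[a.capability]
        for a in current
        if a.capability in before
    }


# Hypothetical capabilities, owners, and evidence for two assessment cycles.
q1 = [
    CapabilityAssessment("AI practices", "Model risk review", "Risk office", 3),
    CapabilityAssessment("Teams, skills, and culture", "RAI training", "HR", 2,
                         ("training records",)),
]
q3 = [
    CapabilityAssessment("AI practices", "Model risk review", "Risk office", 3,
                         ("review minutes",)),
    CapabilityAssessment("Teams, skills, and culture", "RAI training", "HR", 3,
                         ("training records",)),
]
print(compare_cycles(q1, q3))  # {'Model risk review': 2, 'RAI training': 1}
```

In this sketch, the apparent jump for "Model risk review" comes from evidence being recorded rather than from a change in the claimed level, which is the kind of distinction that helps keep maturity scores from becoming symbolic.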