This article reviews risk-based AI regulations.

In Part 1 of this series, we examined which institutions issue AI-related regulations and how various regulatory instruments interact. In this second part, we focus on risk-based regulations—how they classify AI systems by risk category and how they distinguish between the impact of AI and the underlying causes of risk. This perspective is essential for designing internal AI governance that is both compliant and practical across multiple jurisdictions.

AI System Classifications by Risk Category

Risk-based regulations classify AI systems into categories—typically minimal, limited, high, and unacceptable risk—based on their potential impact on individuals, organizations, society, or critical infrastructure. While the labels may look similar across jurisdictions, the criteria and examples assigned to each category differ, creating a fragmented compliance landscape for organizations operating globally.

Prohibited AI Systems: Systems Posing Unacceptable Risks

Most risk-based regulations identify a category of AI systems that pose unacceptable or excessive risk and are therefore prohibited.

The EU AI Act’s “unacceptable risk” category includes social scoring systems and systems that rely on deceptive or exploitative techniques to materially distort a person’s behavior in a way that can cause harm. These systems are explicitly banned.

Similarly, Brazil’s proposed AI regulation introduces the concept of “excessive-risk AI systems.” These include systems that:

- Use subliminal techniques to induce behavior that harms individuals’ health or safety or contradicts the regulation’s principles

- Exploit vulnerabilities of specific groups, such as children or people with physical or mental disabilities, to induce harmful behavior

- Are implemented by the government for the purpose of social scoring

Such excessive-risk systems are prohibited outright, while AI systems that present lower risk remain allowed but regulated.

AI Systems Requiring Strong Oversight: High-Risk Systems

High-risk AI systems are not banned, but they are subject to the strictest regulatory obligations because they can significantly affect rights, access to essential services, or public safety.

The African Union framework defines high-risk AI systems as those with substantial environmental impact or a strong potential to perpetuate bias and discrimination. These systems must undergo comprehensive impact assessments, ethical reviews, and bias-mitigation measures and must comply with strong transparency and data protection safeguards.

The EU AI Act considers AI systems high risk if they are:

- Used as a safety component of a product already regulated under EU health and safety legislation, or

- Deployed in specific high-stakes domains, including education, employment, access to essential public and private services, law enforcement, migration, and the administration of justice.

Brazil’s proposed legislation treats as high-risk AI systems those used in:

- Security devices in critical infrastructure (e.g., traffic control, water and electricity supply)

- Credit assessment and scoring

- Certain autonomous vehicles

- Healthcare applications

- Biometric identification

- Criminal investigation and public security

Under Canada’s Artificial Intelligence and Data Act (AIDA), “high-impact systems” include AI used to make decisions about employment (such as recruitment, hiring, promotion, or termination), to determine access to services (including type, cost, or prioritization), and to process biometric information for identification or for assessing behavior or mental state.

Across these frameworks, high-risk or high-impact AI systems are subject to enhanced oversight, including documentation, impact assessments, transparency obligations, bias mitigation, and clear accountability structures.

AI Systems Requiring Moderate Oversight: Systems with Limited or Medium Risk

Some regulations also define a middle tier of risk, where systems are permitted but require moderate oversight, primarily through transparency and monitoring.

The African Union regulatory framework identifies AI systems with limited or medium risk as those that affect labor markets or digital access. These systems require continuous monitoring, public consultation, and adaptive policy frameworks to address emerging challenges and their societal implications.

The EU AI Act defines limited-risk AI systems largely as those that interact directly with natural persons, such as chatbots, emotion recognition systems, biometric categorization tools, and AI systems that generate “deep fakes”—audio or visual content that appears authentic but is artificially generated or manipulated.

These systems are subject to transparency obligations. Deployers must inform users that they are interacting with an AI system or that content has been generated or altered by AI. This obligation may be waived when use is authorized by law for detecting, preventing, investigating, or prosecuting criminal offenses. The obligation is also limited where AI-generated content is clearly part of an artistic, creative, satirical, or fictional work; in those cases, disclosure need only be made in a manner that does not undermine the presentation or context of the work.

Summary

Risk-based regulations classify AI systems into risk categories, typically including minimal, limited, high, and unacceptable risk.

Systems in the unacceptable or excessive risk category—such as social scoring or manipulative systems exploiting vulnerabilities—are prohibited in jurisdictions such as the EU and Brazil.

High-risk AI systems, particularly those affecting critical infrastructure, law enforcement, healthcare, financial services, and civil rights, are allowed but subject to strict requirements for impact assessment, transparency, data protection, and bias mitigation.

Medium- or limited-risk systems, such as chatbots, deep fake generators, and tools that influence labor markets or digital access, require transparency and ongoing monitoring but are not regulated as heavily as high-risk systems.

Differences in which use cases fall into which category lead to compliance complexity for multinational organizations, underscoring the need for a structured internal approach to risk classification.
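
One way to make such a structured approach concrete is a lookup that records how each jurisdiction classifies a use case and reports the strictest tier. The sketch below is illustrative only. The `Tier` scale, the `CLASSIFICATIONS` table, and the `strictest_tier` helper are hypothetical names introduced for this example, the entries cover only a few use cases mentioned above, and placing Brazil's "excessive risk" and Canada's "high-impact" labels on a shared scale is a simplifying assumption, not a legal determination.

```python
from enum import IntEnum


class Tier(IntEnum):
    """Hypothetical shared scale; a higher value means stricter treatment."""
    MINIMAL = 0
    LIMITED = 1
    HIGH = 2
    PROHIBITED = 3


# Illustrative entries drawn from the examples in this article.
# A real mapping needs legal review of each regulation's text.
CLASSIFICATIONS = {
    "social_scoring": {"EU": Tier.PROHIBITED, "Brazil": Tier.PROHIBITED},
    "credit_scoring": {"Brazil": Tier.HIGH},
    "recruitment": {"EU": Tier.HIGH, "Canada": Tier.HIGH},
    "chatbot": {"EU": Tier.LIMITED},
}


def strictest_tier(use_case, jurisdictions):
    """Return the strictest known tier for a use case, or None if unmapped."""
    known = CLASSIFICATIONS.get(use_case, {})
    tiers = [known[j] for j in jurisdictions if j in known]
    return max(tiers) if tiers else None


print(strictest_tier("recruitment", ["EU", "Canada"]))
print(strictest_tier("chatbot", ["Brazil"]))
```

Returning `None` for an unmapped combination, rather than defaulting to minimal risk, is a deliberate choice in this sketch: a missing entry signals that the use case still needs review in that jurisdiction.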

Risk-Based Regulations: Risk Classification

Beyond labeling AI as low, medium, high, or unacceptable risk, regulations also differ in how they define and structure risk itself. Some focus primarily on the impact of AI system outputs—what happens to individuals, organizations, or ecosystems. Others emphasize the causes of those impacts, such as biased data, lack of oversight, or technical vulnerabilities.

To better understand this landscape, it is useful to distinguish between impact-related risks and cause-related risks.

Impact-Related Risks

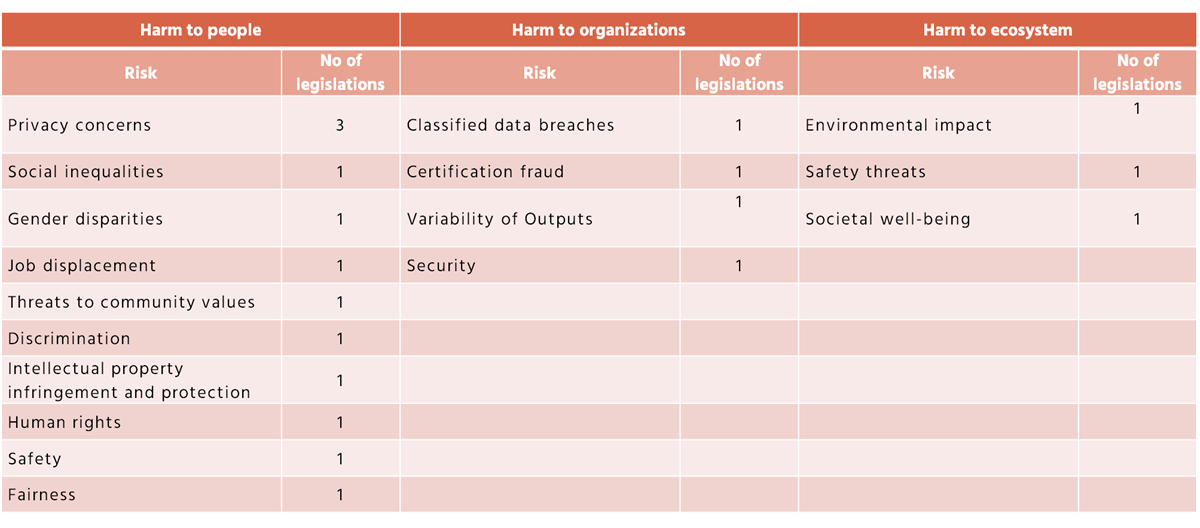

Using the risk classification from the U.S. NIST framework and comparative legal analyses (for example, by White & Case), impact-related risks can be grouped into three levels:

Individual-level impacts

These include privacy violations, discriminatory outcomes, job displacement, and erosion of community values. For example, biased hiring systems, intrusive surveillance tools, or scoring models that limit opportunities can directly harm individuals and communities.

Organizational-level impacts

At this level, risks include data breaches, intellectual property infringements, financial losses, and operational disruptions. AI failures or adversarial attacks can compromise system integrity, damage corporate reputation, and introduce legal liability.

Ecosystem-level impacts

These encompass broader societal and environmental effects, such as the environmental footprint of large-scale AI models, systemic biases that affect specific groups of people, or widespread misinformation that undermines public trust and democratic processes.

Table 1 in the original analysis consolidates these risks by counting the pieces of legislation that address each type.

Table 1: Risk classification for risks identified in different regulations.

Overall, the picture illustrates the multidimensional nature of AI risk and the need for governance mechanisms that extend beyond narrow compliance to include ethical, operational, and societal perspectives.

Cause-Related Risks

Cause-related risks highlight the underlying drivers of harmful impacts. Several regulations explicitly identify technical and governance weaknesses that can lead to negative outcomes:

- Biases in data and models (African Union), which can encode historical or societal inequalities and systematically disadvantage certain groups

- Absence of human oversight (Israel), which reduces the ability to intervene, correct, or override AI-generated decisions

- Lack of explainability and transparency (Israel), making it difficult to understand how and why an AI system arrived at a particular output

- Inadequate disclosure of AI interactions (Israel), which prevents users from recognizing when they are engaging with an AI system

- Technical safety and security vulnerabilities (Israel), including susceptibility to failures or adversarial manipulation

- Unclear accountability and liability frameworks (Israel), which complicate assigning responsibility for AI outcomes

- Deepfakes and misrepresentation (Saudi Arabia), enabling the creation of convincing but false content

- Misinformation and hallucinated outputs, which undermine the reliability of information and public trust

These cause-related factors demonstrate that risk is not only about the consequences of AI outputs, but also about how systems are designed, built, deployed, and managed.

Summary

Risk-based regulations differentiate between impact-related risks (the consequences of AI outputs for individuals, organizations, and ecosystems) and cause-related risks (the technical and governance factors that create those impacts).

Impact-related risks include privacy breaches, discrimination, job loss, operational failures, and environmental or societal harm.

Cause-related risks include biased data and models, lack of human oversight, poor explainability, insufficient transparency, technical vulnerabilities, unclear accountability, and the creation or amplification of deepfakes and misinformation.

Effective AI governance must therefore address both layers: mitigating harmful outcomes and managing the root causes embedded in system design, data, and operational practices.
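
The two layers can be captured in a simple risk register that links each impact to its suspected causes. The following is a minimal sketch under stated assumptions: `ImpactLevel`, `RiskEntry`, and `uncontrolled_causes` are hypothetical names, and the pairing of impacts with causes and controls is illustrative rather than taken from any regulation.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, Iterable, List, Tuple


class ImpactLevel(Enum):
    INDIVIDUAL = "individual"
    ORGANIZATIONAL = "organizational"
    ECOSYSTEM = "ecosystem"


@dataclass(frozen=True)
class RiskEntry:
    impact: str               # impact-related risk (the consequence)
    level: ImpactLevel        # who or what is affected
    causes: Tuple[str, ...]   # cause-related risks (the drivers)


def uncontrolled_causes(entries: Iterable[RiskEntry],
                        controls: Dict[str, str]) -> List[str]:
    """Return, in sorted order, every cause that has no control assigned."""
    causes = {cause for entry in entries for cause in entry.causes}
    return sorted(cause for cause in causes if cause not in controls)


# Illustrative register; the impact/cause pairings are assumptions.
register = [
    RiskEntry("discriminatory hiring outcome", ImpactLevel.INDIVIDUAL,
              ("biased data and models", "absence of human oversight")),
    RiskEntry("widespread misinformation", ImpactLevel.ECOSYSTEM,
              ("deepfakes and misrepresentation",)),
]
controls = {"biased data and models": "bias testing before release"}

print(uncontrolled_causes(register, controls))
# → ['absence of human oversight', 'deepfakes and misrepresentation']
```

Keeping causes separate from impacts lets one control, such as bias testing, be traced to every impact it helps mitigate.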

Action Recommendations for Organizations

To navigate this evolving landscape and translate risk-based regulations into practical governance, organizations can take the following steps:

- Align AI use cases with external risk categories.

Organizations should begin by mapping their AI use cases against the risk categories defined in major jurisdictions such as the EU, Brazil, Canada, and the African Union. This comparison helps determine where stricter controls are required and where governance priorities should focus, particularly for high- and medium-risk systems.

- Develop an internal risk taxonomy aligned with regulations.

It is advisable for organizations to establish a harmonized internal classification of AI risk that reflects both impact-related and cause-related factors. A consistent taxonomy used across business units promotes a shared understanding of risk exposure and regulatory expectations.

- Integrate risk assessment throughout the AI lifecycle.

An organization should ensure that risk evaluation checkpoints are embedded in every stage of the AI lifecycle, from ideation and design to deployment and ongoing monitoring. Systems classified as high risk should trigger additional requirements, including impact assessments, enhanced documentation, or formal approval processes.

- Reinforce governance of cause-related risks.

It is recommended that organizations strengthen technical and procedural controls addressing the underlying causes of AI risks. This includes improving data quality, mitigating bias, maintaining human oversight, ensuring explainability, and securing AI pipelines. Such measures should be integrated with broader data and IT governance practices.

- Clarify roles, responsibilities, and accountability.

Each organization should define who is responsible for conducting risk assessments, approving high-risk use cases, and ensuring regulatory compliance. Clear accountability and close collaboration among data, AI, legal, risk, and business functions help maintain consistency across governance activities.

- Monitor regulatory and risk developments continuously.

It is essential for organizations to maintain a structured process for tracking regulatory updates and evolving interpretations of AI risk. Regular reviews of internal taxonomies and controls ensure that governance frameworks remain aligned with changing external requirements.
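
The lifecycle recommendation above can be sketched as a small gating function that returns the checks due at a given stage. This is an illustration, not a prescribed process: the stage names follow the lifecycle described in this article, while `required_checks`, the `"high"` tier label, and the decision to apply all three additional requirements together are assumptions made for the example.

```python
# Lifecycle stages named in this article.
STAGES = ("ideation", "design", "deployment", "monitoring")

# Checks applied to every system at every stage (assumed baseline).
BASE_CHECKS = ("risk evaluation",)

# Additional requirements triggered by a high-risk classification.
HIGH_RISK_CHECKS = ("impact assessment", "enhanced documentation", "formal approval")


def required_checks(stage, risk_tier):
    """Return the list of checks due at a lifecycle stage for a risk tier."""
    if stage not in STAGES:
        raise ValueError(f"unknown lifecycle stage: {stage!r}")
    checks = list(BASE_CHECKS)
    if risk_tier == "high":
        checks.extend(HIGH_RISK_CHECKS)
    return checks


print(required_checks("design", "high"))
print(required_checks("monitoring", "limited"))
```

In practice an organization would likely vary the checks by stage as well as by tier. The uniform baseline here only keeps the example short.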

By combining external regulatory classifications with internal risk and control frameworks, organizations can progress beyond one-time compliance exercises toward sustainable, risk-aware AI governance—balancing innovation with responsibility to people, businesses, and society.