This article discusses the challenges associated with principle-based AI regulations.

In Part 1, we outlined which institutions issue AI-related regulations and how different legislative instruments interact across jurisdictions.

Part 2 examined risk-based regulations, showing how they classify AI systems by risk level and distinguish between impact- and cause-related factors that shape governance obligations.

Part 3 focuses on principle-based regulations—a complementary approach that articulates high-level values and behavioral expectations rather than detailed technical rules.

In this article, we explore the principles most frequently referenced in national and international AI regulations, examine how they relate to risk-based approaches, and discuss their growing role in shaping practical, adaptive AI governance.

The Nature and Role of Principles in AI Regulation

Principle-based regulations provide a flexible, durable approach to AI oversight. Unlike prescriptive legislation that dictates specific methods or technologies, principles set out high-level expectations for responsible behavior that can remain relevant despite rapid innovation. They articulate what regulators expect AI systems to achieve—fairness, safety, transparency, accountability—without prescribing exactly how to achieve it.

A review of seventeen AI regulations issued by national governments, governmental institutions, and international bodies shows that principles and requirements often overlap. Some documents label “transparency” or “fairness” as principles, while others treat them as obligations. To avoid confusion, this analysis consolidates both under the single term principles—defined as fundamental expectations guiding the responsible design, use, and oversight of AI systems.

For comparison across jurisdictions, principles have been grouped into three overarching categories:

- AI system impact – focusing on outcomes that affect individuals, organizations, and society.

- AI lifecycle management – addressing how systems are designed, developed, tested, and monitored.

- AI global ecosystem – emphasizing international collaboration, awareness, and capacity building.

This structure highlights both convergence and diversity in how regulatory authorities interpret responsible AI.

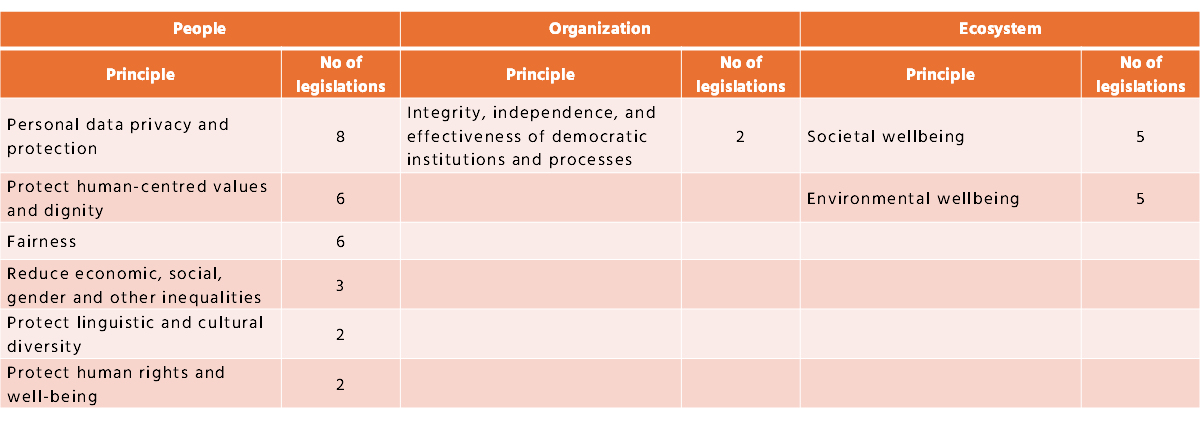

Principles Focused on AI System Impact

Principle-based regulations place significant emphasis on the impact of AI systems. These principles focus on how AI affects individuals, organizations, and the broader environment. Table 1 summarizes the principles identified across the analyzed regulations.

Table 1: Principles related to AI system impact.

These principles reflect the ethical, social, and legal dimensions of AI deployment, emphasizing how systems influence people, institutional processes, and the environment.

Impact on Individuals

Across all the regulations analyzed, the protection of individuals appears most prominently. Principles such as privacy, fairness, and non-discrimination reflect a shared understanding that AI must not compromise personal rights or human dignity. The notion of fairness extends beyond algorithmic bias—it represents a broader commitment to equitable treatment and inclusion.

These individual-level principles align with the impact-related risks discussed in Part 2, including privacy violations, discrimination, and the erosion of autonomy.

Impact on Organizations

Regulations also introduce principles that address the internal implications of AI adoption. Integrity and independence guide organizations toward sound decision-making, while reliability and accountability promote process discipline and trust. Such principles address cause-related risks, such as governance failures, loss of control, and operational inconsistency.

By embedding these ideas into AI-related activities, regulators signal that organizational maturity—governance structures, accountability lines, and quality processes—is as critical to AI responsibility as technical safeguards.

Impact on the Broader Ecosystem

Ecosystem principles, though less common, are gaining attention. Sustainability, societal well-being, and responsible innovation extend the scope of regulation beyond direct users or developers. These principles reflect growing awareness that AI systems consume energy, shape markets, and influence collective behavior.

Their presence, even when aspirational, demonstrates the increasing integration of environmental and social sustainability goals into AI governance.

Alignment Between Risks and Principles

There is strong alignment between the principles and the risk categories identified earlier in this series.

- Privacy principles mitigate privacy risks.

- Fairness and non-discrimination principles counter social and ethical risks.

- Sustainability principles reflect environmental and long-term systemic risks.

This correspondence reinforces the idea that risk-based and principle-based regulations share the same foundation but operate at different levels: the former is analytical and operational, the latter ethical and aspirational.

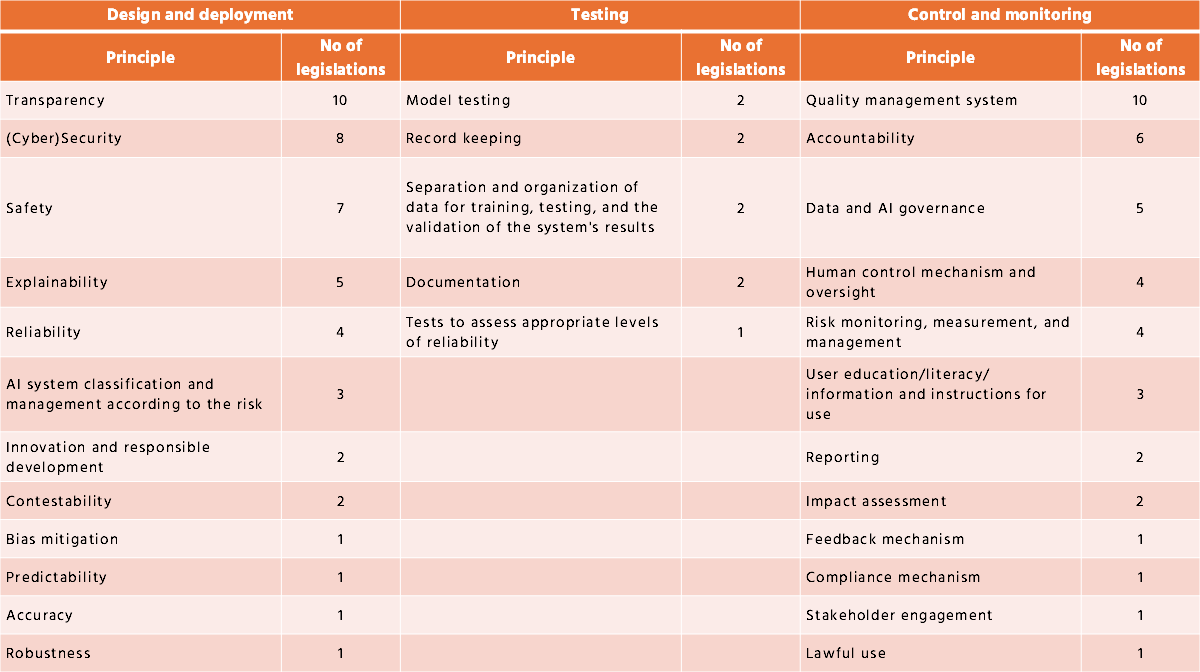

Principles for AI Lifecycle Management

While impact principles describe the desired outcomes of AI, lifecycle principles specify how these outcomes can be realized in practice. Across the seventeen regulations reviewed, twenty-nine principles were identified and distributed across three broad lifecycle stages: design and deployment, testing, and ongoing control and monitoring.

Table 2 summarizes the distribution of principles across these lifecycle stages. Each principle is accompanied by the number of regulatory frameworks in which it appears, indicated in parentheses, to reflect its relative prominence.

Table 2: Principles applied to AI lifecycle stages.

Design and Deployment Stage

The most frequently cited lifecycle principle is transparency. Regulations expect organizations to make AI systems’ capabilities, data sources, and decision logic understandable to both users and regulators. Transparency acts as a gateway principle—it enables the verification of other principles such as fairness, safety, and accountability.

Safety and security follow closely, emphasizing protection against harm to individuals, institutions, and data. The recurring mention of explainability and reliability reflects the need for trust: AI outcomes should not only be accurate but also interpretable and dependable.

Less common but still relevant are emerging principles such as bias mitigation, responsible innovation, and contestability. Their inclusion, even if infrequent, points to the evolution of regulatory thinking toward proactive management of social and ethical concerns.

Testing Stage

Few regulations describe the testing phase in detail, but those that do stress methodological rigor. The identified principles focus on maintaining documentation, ensuring traceability, and properly separating training and test data. These ensure that models can be evaluated objectively and replicated when needed. Such procedural safeguards translate ethical intent into verifiable practice.
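
As a rough illustration of how these testing principles might look in practice, the Python sketch below splits a dataset into disjoint training and test sets and records an audit entry (seed, sizes, and content hashes) so the split can be documented and reproduced. The function name `split_with_audit`, the audit fields, and the sample records are hypothetical and are not drawn from any of the regulations reviewed.

```python
import hashlib
import json
import random


def split_with_audit(records, test_fraction=0.2, seed=42):
    """Split records into disjoint train/test sets and return an audit entry.

    Hypothetical helper: the audit fields are an assumption about what a
    traceability record could contain, not a regulatory requirement.
    """
    if len(records) < 2:
        raise ValueError("need at least two records to split")
    if not 0 < test_fraction < 1:
        raise ValueError("test_fraction must be strictly between 0 and 1")

    # A seeded shuffle makes the split repeatable for later review.
    indices = list(range(len(records)))
    random.Random(seed).shuffle(indices)

    # Keep at least one record on each side of the split.
    n_test = min(len(records) - 1, max(1, round(len(records) * test_fraction)))
    test_idx = sorted(indices[:n_test])
    train_idx = sorted(indices[n_test:])

    def fingerprint(idx):
        # Hash the selected records so later changes to either set are detectable.
        payload = json.dumps([records[i] for i in idx], sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    audit = {
        "seed": seed,
        "test_fraction": test_fraction,
        "train_size": len(train_idx),
        "test_size": len(test_idx),
        "train_sha256": fingerprint(train_idx),
        "test_sha256": fingerprint(test_idx),
    }
    train = [records[i] for i in train_idx]
    test = [records[i] for i in test_idx]
    return train, test, audit


# Example usage with made-up records.
sample = [{"id": i, "label": i % 2} for i in range(10)]
train_set, test_set, audit_entry = split_with_audit(sample)
print(json.dumps(audit_entry, indent=2))
```

Storing the audit entry alongside the evaluation results is one simple way to show that the training and test data were kept separate and that the evaluation can be repeated.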

Control and Monitoring Stage

In the post-deployment stage, quality management becomes a dominant theme. Ten regulations explicitly refer to quality management systems, signaling that oversight should be structured and continuous. Accountability, governance, and human oversight complement this principle by defining who remains responsible for AI outcomes and how oversight should function in practice.

Risk monitoring and management connect lifecycle principles back to risk-based regulation, emphasizing that compliance is not static but cyclical—AI systems must be observed, adjusted, and reevaluated as conditions change.

Collectively, these lifecycle principles illustrate the transition from compliance as documentation to compliance as active stewardship. They underscore that responsible AI depends not only on values but also on institutional capacity to implement them.

Principles Supporting the Global AI Ecosystem

Beyond individual organizations, several regulations emphasize principles that strengthen the global ecosystem in which AI develops and operates. These principles recognize that AI is inherently transnational, and its responsible advancement depends on shared infrastructure and knowledge.

Common ecosystem principles include:

- Development of AI-specific legal structures: Regulations encourage governments to create coordinated legislative environments that address emerging AI risks without stifling innovation.

- Public awareness and education: Many documents stress the need for societal literacy about AI’s benefits and challenges.

- Investment in safe AI development: Economic support for research, training, and ethical technology deployment is seen as a regulatory responsibility.

- Interoperable technical tools: The ability to share standards and mechanisms across borders helps align diverse regulatory systems.

- Information exchange and cooperation: Cross-border data, expertise, and experience sharing are viewed as essential for preventing regulatory fragmentation.

Together, these principles outline a vision of AI governance that extends beyond compliance toward collaboration. They reflect an understanding that ethical AI depends not only on organizational behavior but also on collective global effort.

Key Insights from the Comparative Review

The comparative analysis of seventeen AI regulations reveals that principle-based regulation is no longer a peripheral or aspirational layer—it has become a central mechanism for guiding responsible AI. Several consistent insights emerge across jurisdictions.

- Principles and requirements are deeply interlinked.

Many regulations use identical terms, such as transparency or accountability, to describe both principles and obligations. This overlap shows that principles often serve as the conceptual foundation for later concrete rules and compliance criteria.

- Protection of individuals remains the universal cornerstone.

Despite regional and cultural differences, privacy, fairness, and respect for fundamental rights dominate the regulatory landscape. This shared focus confirms that AI governance, at its core, is built around safeguarding human dignity and equity.

- Operational principles are gaining ground.

Transparency, safety, explainability, and quality management are increasingly treated as practical governance standards rather than abstract values. Their presence marks a shift from ethical declarations to measurable business processes embedded across the AI lifecycle.

- Global cooperation is becoming an essential regulatory goal.

Several regulations now emphasize public awareness, interoperability, and information sharing. These ecosystem principles recognize that AI systems operate across borders, and trustworthy AI depends on coordinated international action.

Together, these trends demonstrate that principle-based and risk-based regulations are complementary. Principles articulate what responsible AI should achieve and why; risk-based mechanisms specify how and when those expectations must be met. Combined, they create a balanced and adaptive regulatory architecture.

Recommendations for Organizations

Principle-based regulations provide valuable flexibility, yet they rely on interpretation to become effective in practice. The following recommendations outline ways organizations can translate high-level principles into sustainable governance approaches while remaining consistent with their regulatory environments.

- An organization should establish a shared internal understanding of key principles to ensure that terms such as fairness, transparency, and accountability are interpreted consistently across business, technical, and legal functions.

- It may be helpful for organizations to link each principle to specific AI lifecycle activities so that ethical expectations—such as transparency in design or accountability in monitoring—become embedded in day-to-day practices rather than treated as external obligations.

- An organization should align principles with its risk management processes by mapping them to impact- and cause-related risk categories. Such alignment helps create coherence between ethical aspirations and measurable outcomes.

- Organizations could consider applying principles as evaluation perspectives when assessing AI use cases. Viewing initiatives through lenses such as fairness, explainability, or sustainability often leads to more balanced decisions and broader stakeholder confidence.

- It is recommended that organizations maintain continuous oversight and human involvement to uphold accountability, quality management, and the capacity for intervention throughout the system’s lifecycle.

- An organization may benefit from engaging with the broader AI governance ecosystem through professional collaborations, shared technical standards, and knowledge exchanges that foster alignment with emerging international expectations.

Collectively, these actions illustrate how principle-based regulations can evolve from abstract ethical guidance into living governance practices. When consistently applied, they enable organizations to integrate societal values into their operations while strengthening trust in the development and use of AI.
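
One way to make the mapping recommendations above concrete is a small internal register that links each principle to a lifecycle stage, a risk type, and a day-to-day activity. The Python sketch below is illustrative only: the class `PrincipleMapping`, the helper functions, and the specific stage, risk, and activity assignments are assumptions made for the example, and an organization would substitute its own interpretation of the applicable regulations.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class PrincipleMapping:
    """Hypothetical register entry linking a principle to practice."""
    principle: str
    lifecycle_stage: str  # "design and deployment", "testing", "control and monitoring"
    risk_type: str        # "impact" or "cause"
    activity: str         # the concrete practice that embeds the principle


# Illustrative entries; the assignments are assumptions, not regulatory text.
REGISTER = [
    PrincipleMapping("transparency", "design and deployment", "cause",
                     "document capabilities, data sources, and decision logic"),
    PrincipleMapping("fairness", "design and deployment", "impact",
                     "review outputs for discriminatory effects"),
    PrincipleMapping("traceability", "testing", "cause",
                     "record how training and test data were separated"),
    PrincipleMapping("accountability", "control and monitoring", "cause",
                     "name an owner responsible for AI outcomes"),
]


def group_by_stage(register):
    """Return a dict of lifecycle stage -> list of principles mapped to it."""
    stages = defaultdict(list)
    for entry in register:
        stages[entry.lifecycle_stage].append(entry.principle)
    return dict(stages)


def coverage_gaps(required_principles, register):
    """Return the required principles that have no entry in the register."""
    mapped = {entry.principle for entry in register}
    return [p for p in required_principles if p not in mapped]


# Example usage: check which expected principles still lack a mapped activity.
print(group_by_stage(REGISTER))
print(coverage_gaps(["transparency", "sustainability"], REGISTER))
```

A register like this gives business, technical, and legal functions a shared reference point, and the gap check shows which principles have not yet been tied to a concrete activity.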

The analysis of principle-based regulations completes the review of regulatory instruments issued by governmental and international authorities. Together with the insights from Parts 1 and 2, it provides a comprehensive view of how legislation defines the foundations of responsible AI. In the following article, the focus will shift from legally binding instruments to industry frameworks developed by professional and standard-setting bodies. These frameworks translate regulatory principles into structured methods, models, and practices that organizations can adopt to design and implement effective AI governance.