This article reviews the bodies that issue AI regulations and compares how their regulations differ.

Artificial intelligence is evolving faster than the rules meant to keep it in check. Around the world, governments, regulators, and industry bodies are setting standards for responsible AI—but they often speak very different languages.

This series, AI Regulations and Frameworks, brings clarity to that complexity. It distinguishes between two types of instruments that are often conflated but serve very different purposes. Regulations are laws, rules, or formal orders issued by authorities that define what organizations must do to ensure AI is developed and used safely. Frameworks, in contrast, are guidance documents created by industry and professional organizations to help companies translate those requirements into practice.

Across several articles, we’ll explore and compare AI regulations from seventeen authorities (five international organizations and twelve national governments) and frameworks published by eight leading industry bodies. We’ll look at how these documents define AI systems, structure compliance, and address risk and accountability.

For data and AI professionals, this series offers a practical roadmap—helping you understand not only what’s required, but also how to align your strategies and governance practices with the fast-evolving AI regulatory landscape.

This article—the first in the AI Regulations and Frameworks series—analyzes how AI-related regulations are structured and classified across regions, organizations, and legal systems.

Analyzing AI Regulations: Five Key Dimensions

To understand the current regulatory landscape, this study classifies AI-related regulations according to five dimensions:

- Issuing body

- Scope of the regulation

- Legal status

- Definition of an AI system

- Compliance approach

The analysis is based on information available as of April 2025. This article covers the first four dimensions; the fifth, compliance approach, is the subject of the next article.
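Teams building an internal inventory of AI regulations could record each authority along these same five dimensions and then compute shares like the ones reported below. The following is a minimal sketch of such a record; the class names, enum values, the `binding_share` helper, and the two example authorities are all hypothetical and only mirror the categories used in this article.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class IssuerType(Enum):
    INTERNATIONAL = "international organization"
    NATIONAL = "national government"


class Scope(Enum):
    SINGLE = "single comprehensive regulation"
    MULTIPLE = "multiple instruments"


class LegalStatus(Enum):
    BINDING = "legally binding"
    NON_BINDING = "non-legally binding (voluntary)"


class DefinitionApproach(Enum):
    SINGLE = "single definition"
    MULTIPLE = "multiple definitions"
    NONE = "no definition"


@dataclass(frozen=True)
class RegulationRecord:
    """One authority's AI-related regulation(s), classified along the five dimensions."""
    authority: str
    issuer_type: IssuerType
    scope: Scope
    legal_status: LegalStatus
    definition_approach: DefinitionApproach
    compliance_approach: Optional[str] = None  # free text here; categories are covered later in the series


def binding_share(records: list[RegulationRecord], issuer_type: IssuerType) -> float:
    """Fraction of records for one issuer type that are legally binding.

    Assumes one record per authority, so this equals the share of authorities.
    """
    group = [r for r in records if r.issuer_type is issuer_type]
    if not group:
        return 0.0
    return sum(r.legal_status is LegalStatus.BINDING for r in group) / len(group)


# Hypothetical records for illustration only; not drawn from the study's data.
records = [
    RegulationRecord("Authority A", IssuerType.NATIONAL, Scope.SINGLE,
                     LegalStatus.BINDING, DefinitionApproach.SINGLE),
    RegulationRecord("Authority B", IssuerType.NATIONAL, Scope.MULTIPLE,
                     LegalStatus.NON_BINDING, DefinitionApproach.NONE),
]
print(binding_share(records, IssuerType.NATIONAL))  # 0.5
```

Using enums rather than free-text strings keeps the categories fixed, so a typo cannot silently create a sixth legal status or a fourth definition approach.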

Regulations by Type of Issuing Body

Two broad categories of authorities develop AI-related regulations: international organizations and national governments.

AI-Related Regulations by International Organization

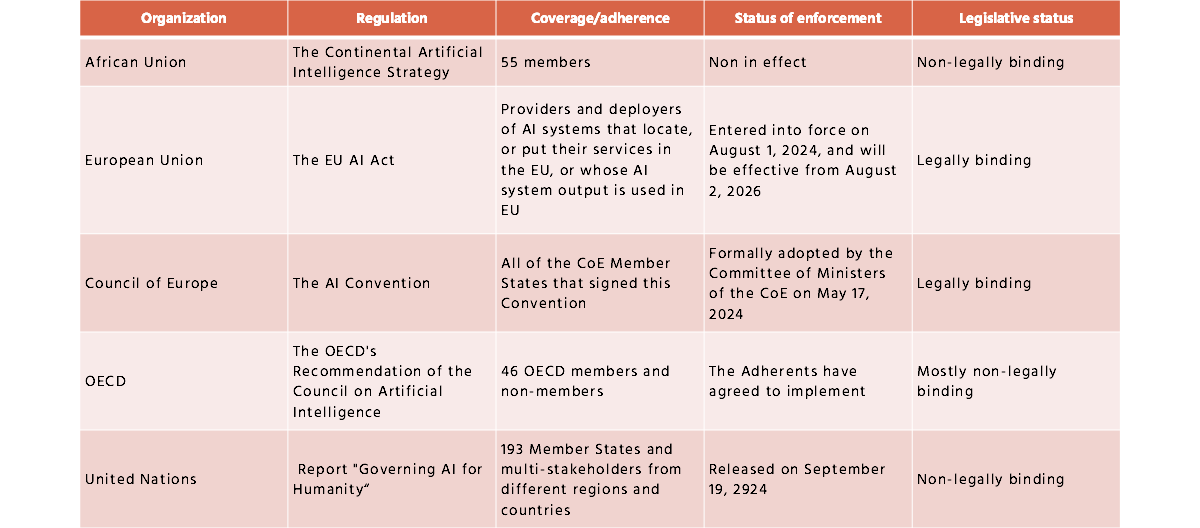

Five major international organizations have issued regulatory instruments addressing AI governance and ethics. Table 1 summarizes key characteristics of these regulations.

Table 1. AI regulations issued by international organizations.

The analysis reveals that these organizations differ significantly in scope, applicability, and enforceability under the law.

Some are legally binding. The EU Artificial Intelligence Act (EU AI Act) applies extraterritorially: it covers not only EU-based entities but also organizations outside the EU that place AI systems on the EU market or whose AI systems produce outputs used in the EU. The Council of Europe Framework Convention on Artificial Intelligence and Human Rights, Democracy and the Rule of Law is an international treaty that binds the states that ratify it.

Others, such as the instruments developed by the African Union, the Organisation for Economic Co-operation and Development (OECD), and the United Nations, operate primarily as non-legally binding frameworks or policy recommendations. These initiatives promote global alignment, but their voluntary nature limits their direct enforceability.

This diversity of form creates a significant challenge for countries and enterprises trying to ensure compliance across jurisdictions. For example, EU member states must apply the mandatory provisions of the EU AI Act; those that ratify the Council of Europe’s AI Convention take on a separate set of treaty obligations; and many also follow voluntary instruments such as the OECD AI Principles. Managing obligations from several binding and voluntary instruments at once can lead to overlaps or even contradictions in policy implementation.

Country-Specific AI Regulations

Despite international efforts, many countries continue to issue their own AI-related regulations. This study reviews the national regulatory instruments of Australia, Brazil, Canada, China, India, Israel, Japan, Saudi Arabia, Singapore, South Korea, the United Kingdom, and the United States, as tracked by the AI Watch – Global Regulatory Tracker.

The following sections compare the scope, legal force, and definitional approaches of these national regulations.

Regulations by Scope

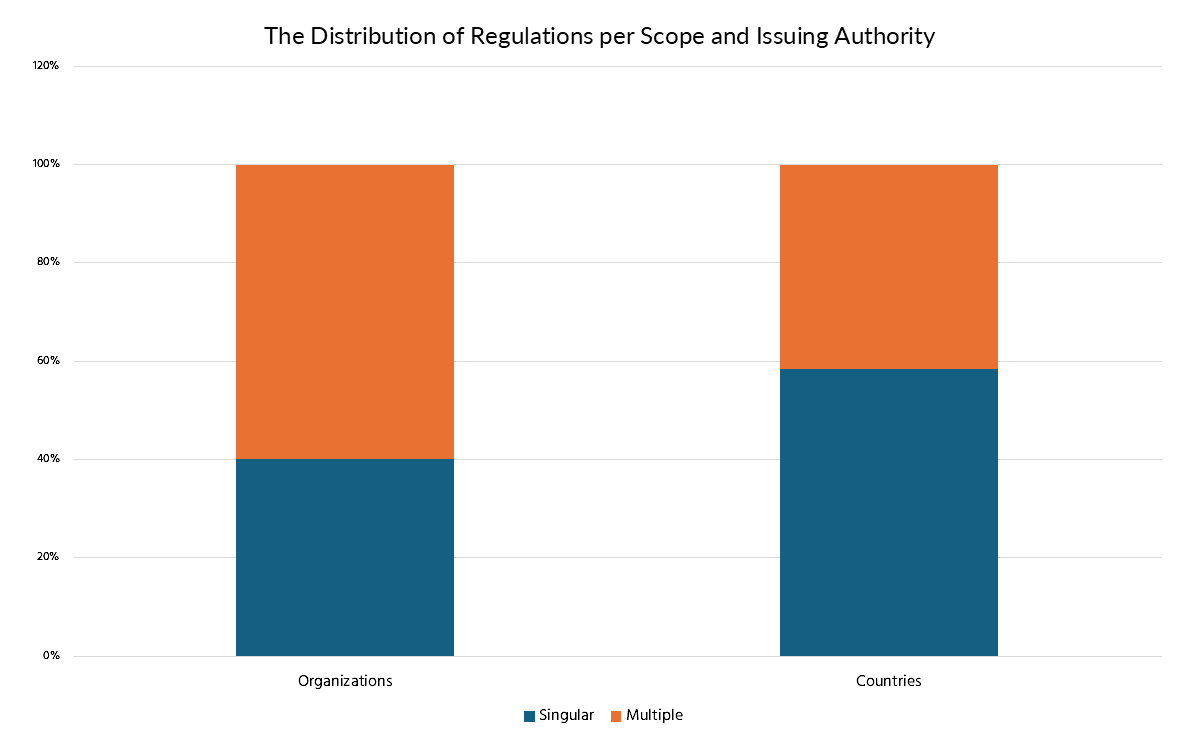

Some authorities issue a single comprehensive regulation, while others develop multiple instruments to address different aspects of AI, such as data protection, risk classification, or ethics. Figure 1 illustrates the distribution of AI-related regulations by scope and issuing authority.

Figure 1. Distribution of AI-related regulations by scope and issuing authority.

The analysis shows a roughly even split between the two approaches.

Among international organizations, 40% (two of five) have adopted a single, unified regulation, while 60% (three of five) use multiple instruments.

Among national governments, 58% (seven of twelve) rely on a single regulation, while the remaining 42% use several documents to cover various dimensions of AI governance.
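Because the study covers only five international organizations and twelve national governments, each percentage corresponds to a small whole number of authorities. The sketch below recovers those counts, assuming the published figures are rounded to the nearest whole percent; the `implied_counts` function is a hypothetical helper written for this illustration.

```python
def implied_counts(percent: int, total: int) -> list[int]:
    """Return every count k in 0..total whose share k/total rounds to `percent`.

    Assumes the published percentage was rounded to the nearest whole number.
    """
    return [k for k in range(total + 1) if round(100 * k / total) == percent]


# Five international organizations and twelve national governments were reviewed.
print(implied_counts(40, 5))   # [2]
print(implied_counts(60, 5))   # [3]
print(implied_counts(58, 12))  # [7]
```

Seven of twelve is the only count that rounds to 58%, which leaves five national governments using several documents.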

This diversity adds another layer of complexity for multinational organizations, which must navigate overlapping requirements from both international and domestic sources.

Regulations by Legal Status

The legal status of a regulation determines whether compliance is mandatory or voluntary.

Legally binding regulations are formal laws or directives enacted by authorities. Non-compliance can lead to fines, sanctions, or other legal consequences.

Non-legally binding (voluntary) instruments—such as recommendations, principles, or best practices—offer guidance without the force of law.

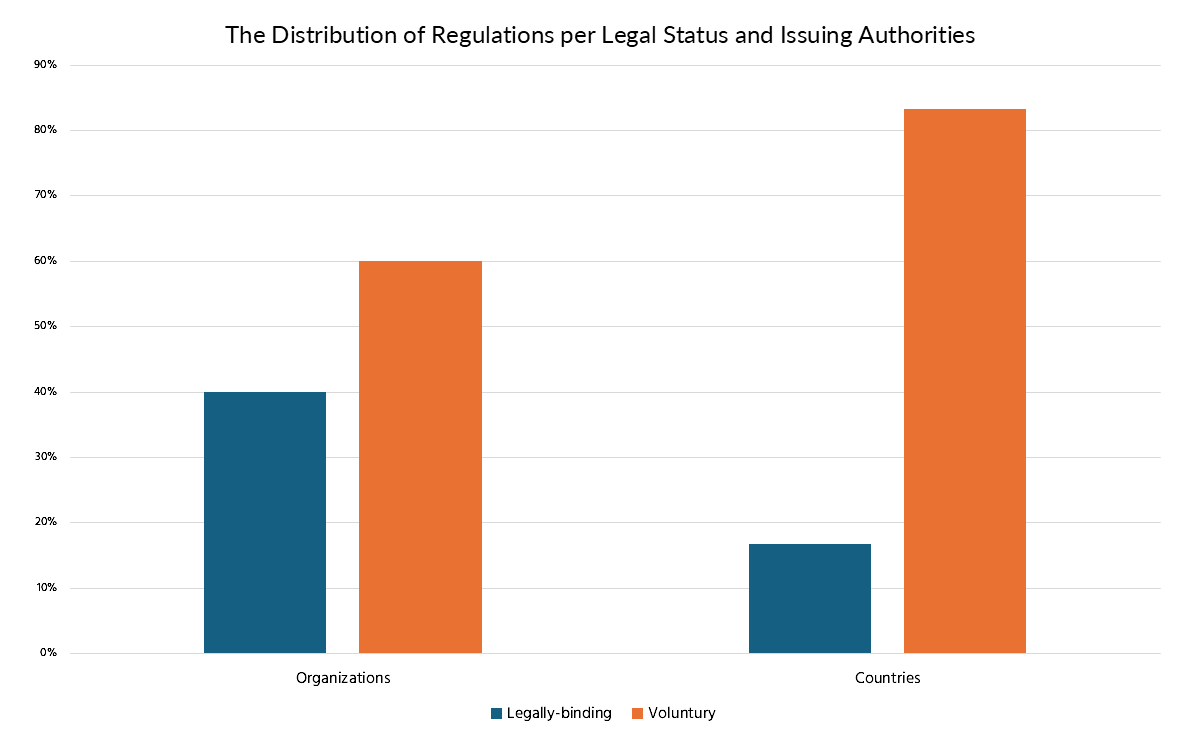

Figure 2 shows how AI-related regulations vary in their legal enforceability.

Figure 2. Distribution of regulations by legal status and issuing bodies.

According to the analysis, 40% of international organizations (two of five) but only 17% of national authorities (two of twelve) have issued legally binding AI regulations. For organizations, this means that although the number of AI rules is growing, only a fraction of them currently carries legal consequences.

Still, voluntary instruments often influence how future binding laws are designed, making early alignment a strategic advantage.

The Definition of Artificial Intelligence in Regulations

One of the most debated topics in the regulatory landscape is how “artificial intelligence” itself is defined.

Two aspects are particularly revealing:

- The general approach to defining an AI system

- The introductory and descriptive language used in those definitions

Approaches to Defining an AI System

Three main approaches emerge across regulations:

- Providing a single, unified definition within the regulation.

- Offering multiple definitions, adapted to specific sectors or contexts.

- Omitting a definition entirely.

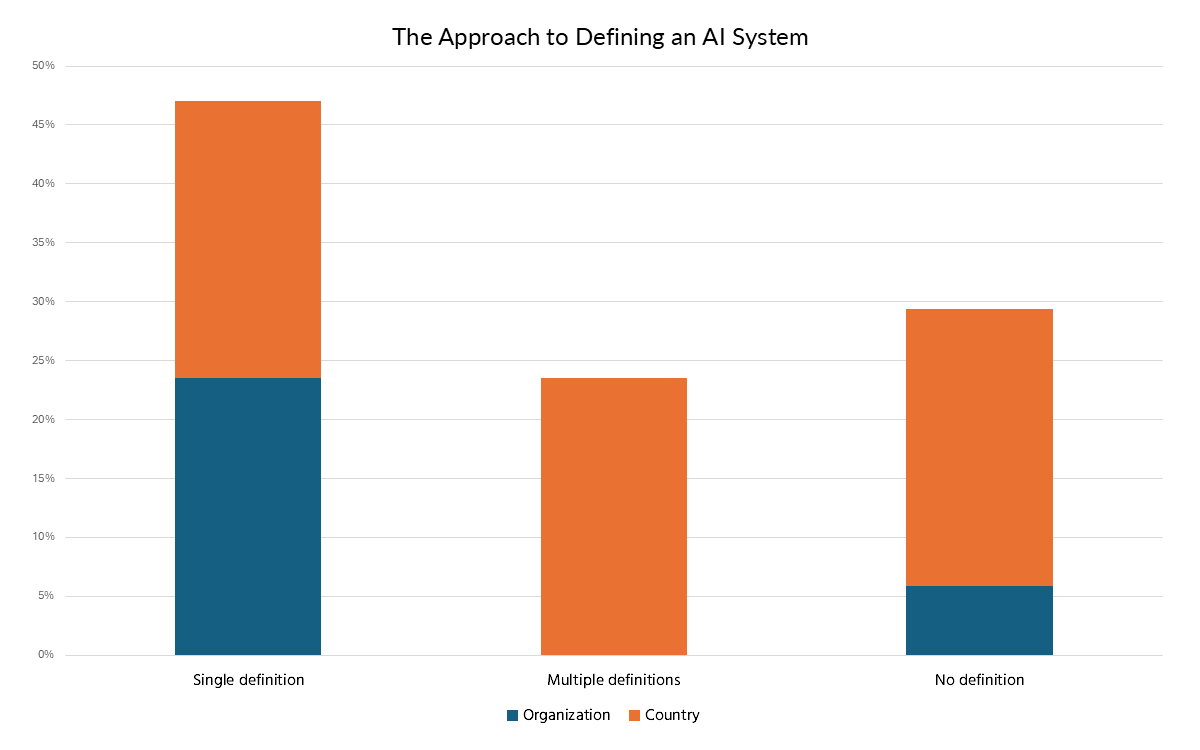

Figure 3 illustrates these approaches among the analyzed authorities.

Figure 3. Distribution of approaches to define an AI system.

Only 45% of the studied organizations and countries provide a single, formal definition of an AI system. The rest either present multiple versions or omit a definition altogether.

This lack of standardization makes it difficult for global enterprises to maintain consistency in AI compliance and governance programs. Without a shared understanding of what constitutes an AI system, obligations may vary widely across jurisdictions.
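One way a global compliance team might cope with the three approaches is to decide, per jurisdiction, which definition governs a given use case, and to fall back to an internal working definition when the regulation offers none. This is a minimal sketch; the function name, the `"*"` key for a general definition, and the fallback rule are hypothetical design choices, not taken from any regulation.

```python
def resolve_definition(definitions: dict[str, str], sector: str, internal_default: str) -> str:
    """Choose which AI-system definition to apply for one use case in one jurisdiction.

    `definitions` encodes the three approaches (hypothetical convention):
      - single definition:    {"*": text}
      - multiple definitions: {"finance": text_a, "health": text_b, ...}
      - no definition:        {}
    """
    if sector in definitions:   # a sector-specific definition takes precedence
        return definitions[sector]
    if "*" in definitions:      # otherwise use the jurisdiction's general definition
        return definitions["*"]
    return internal_default     # nothing applies: use the organization's own working definition


INTERNAL = "Internal working definition"  # hypothetical placeholder text

print(resolve_definition({"*": "Definition from jurisdiction X"}, "health", INTERNAL))
print(resolve_definition({"finance": "Finance-sector definition"}, "health", INTERNAL))
print(resolve_definition({}, "health", INTERNAL))
```

Note the second call: when a jurisdiction defines AI only for other sectors, the sketch falls back to the internal definition rather than borrowing an unrelated sector's wording. A real program might instead flag such cases for legal review.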

Differences in Terminology and Descriptive Language

Even when definitions exist, their wording and focus differ substantially.

About 80% of the analyzed regulations introduce the concept using the term “system.” Others use alternative openings, such as “electronic representation” or “products and services.”

Descriptive terms such as “computer-based,” “machine-based,” or “engineered” further shape the meaning, but they often remain broad and open to interpretation.

Definitions also differ in emphasis: some focus on capabilities (learning, adapting, reasoning, sensing, interacting), while others stress outputs (prediction, recommendation, decision-making, content generation).

These differences reflect varying regulatory priorities—from emphasizing technical autonomy to focusing on the potential societal impact of AI systems. For organizations, this means every definition must be carefully analyzed and contextualized before it can be operationalized.
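A first-pass comparison of definitions can be automated by checking which capability terms and which output terms each one uses. The sketch below does simple case-insensitive substring matching against the terms listed above; the term lists, the `emphasis` function, and the sample definition are hypothetical, and real regulatory wording would need a far richer vocabulary (for example, "learns" or "decisions" would not match).

```python
# Terms taken from the capability/output comparison above; real definitions vary more.
CAPABILITY_TERMS = ("learning", "adapting", "reasoning", "sensing", "interacting")
OUTPUT_TERMS = ("prediction", "recommendation", "decision-making", "content generation")


def emphasis(definition: str) -> dict[str, list[str]]:
    """Report which capability and output terms appear in a definition (substring match)."""
    text = definition.lower()
    return {
        "capabilities": [t for t in CAPABILITY_TERMS if t in text],
        "outputs": [t for t in OUTPUT_TERMS if t in text],
    }


# Hypothetical definition text, not quoted from any regulation.
sample = ("An engineered system capable of learning and reasoning "
          "that produces predictions and recommendations.")
print(emphasis(sample))
# {'capabilities': ['learning', 'reasoning'], 'outputs': ['prediction', 'recommendation']}
```

A definition that scores mostly on capabilities leans toward technical autonomy; one that scores mostly on outputs leans toward societal impact. The tally is only a starting point for the manual analysis the paragraph above calls for.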

Implications for Compliance and Strategy

This analysis highlights one central truth: AI regulation is far from uniform.

Differences in scope, binding power, and definition shape how organizations must design and manage their AI governance frameworks.

While the EU AI Act sets a global benchmark for enforceable, risk-based regulation, other authorities rely on principles, voluntary codes, or guidance documents. This fragmented environment requires organizations to move beyond simple checklists and develop governance structures flexible enough to accommodate multiple interpretations of compliance.

Recommendations for Data and AI Professionals

To navigate this evolving and diverse regulatory landscape, data and AI professionals should consider the following actions:

- Map all applicable regulations — Identify which global, regional, and national AI regulations affect your organization, including voluntary instruments that may influence future compliance expectations.

- Monitor evolving definitions — Stay informed about how different jurisdictions define “AI systems” and align internal documentation accordingly.

- Adopt a layered compliance strategy — Combine adherence to legally binding laws with proactive implementation of voluntary standards to build trust and readiness.

- Integrate AI governance into data management — Leverage existing data governance capabilities (lineage, quality, metadata, and ethics) as foundations for AI accountability.

- Collaborate across disciplines — Ensure that legal, technical, and business stakeholders work together to interpret and operationalize regulatory requirements effectively.
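The first and third recommendations can be combined into a simple register: record where each instrument applies and whether it is legally binding, then split the instruments relevant to your organization's footprint into a binding layer and a voluntary layer. This is a minimal sketch; the `Instrument` class, the jurisdiction labels, and the instrument names are hypothetical placeholders, not real regulations.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Instrument:
    name: str
    jurisdictions: frozenset[str]  # where the instrument applies
    binding: bool                  # legally binding (True) or voluntary guidance (False)


def layered_obligations(instruments: list[Instrument], operating_in: set[str]) -> tuple[list[str], list[str]]:
    """Return (binding, voluntary) instrument names that apply in any of the given jurisdictions."""
    applicable = [i for i in instruments if i.jurisdictions & operating_in]
    binding = sorted(i.name for i in applicable if i.binding)
    voluntary = sorted(i.name for i in applicable if not i.binding)
    return binding, voluntary


# Hypothetical register for illustration only.
register = [
    Instrument("Regional AI Act", frozenset({"Region R"}), True),
    Instrument("National AI Guidelines", frozenset({"Country C"}), False),
    Instrument("Global AI Principles", frozenset({"Region R", "Country C", "Country D"}), False),
    Instrument("Country D AI Law", frozenset({"Country D"}), True),
]
binding, voluntary = layered_obligations(register, {"Region R", "Country C"})
print(binding)    # ['Regional AI Act']
print(voluntary)  # ['Global AI Principles', 'National AI Guidelines']
```

Keeping voluntary instruments in the register, rather than discarding them, supports the point made earlier that today's guidance often shapes tomorrow's binding law.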

The next article examines the fifth dimension, compliance approach, beginning with risk-based regulations.